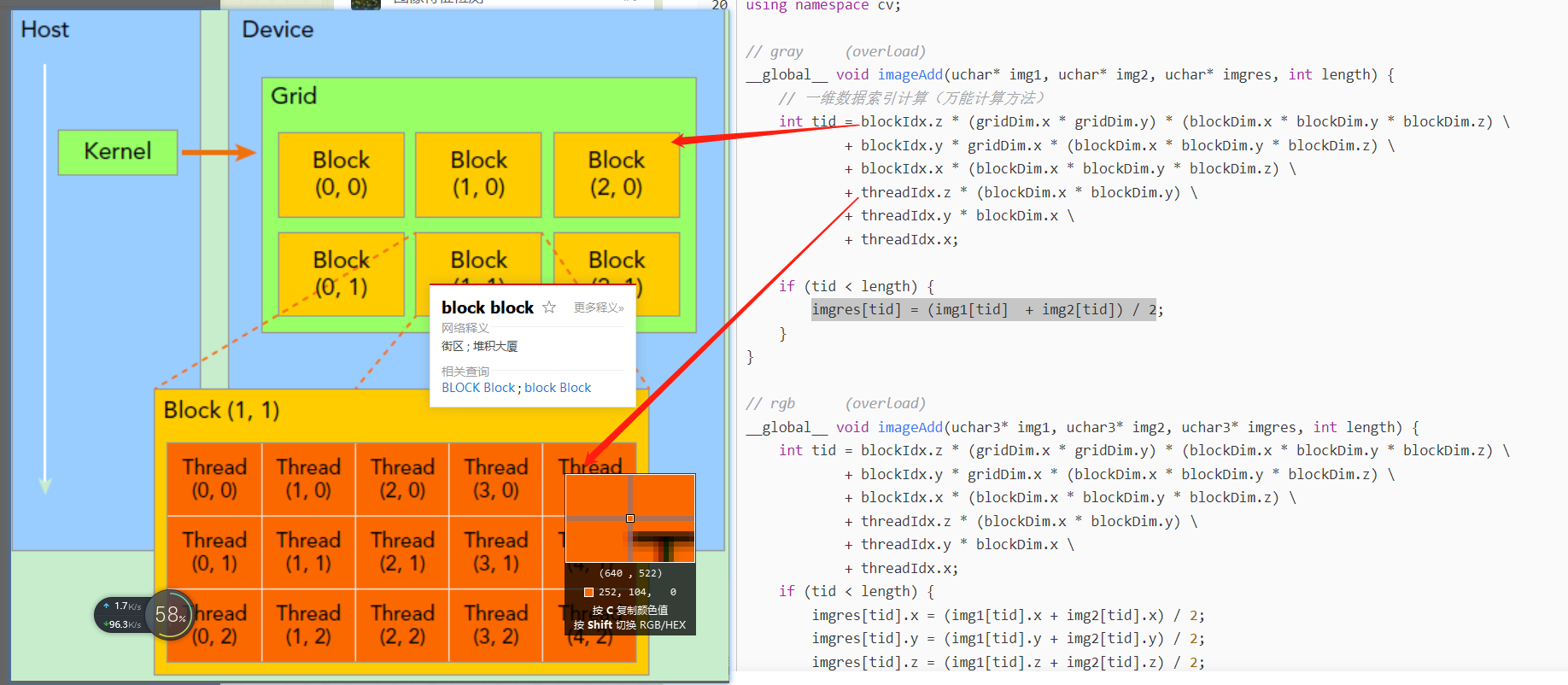

The kernel is declared as `attributes(global) subroutine saxpy(x, y, a)`, which indicates that the subroutine runs on the device but is called from the host. Remember that the host launches a "**grid** of blocks, with each block having **tBlock** threads", and each thread works on a single element of the array. The variables `blockDim`, `blockIdx` and `threadIdx` are predefined by CUDA and have the same `dim3` type as `grid` and `tBlock` on the host. Since only the `x` component was used to launch the kernel, only the `x` component is used here. Think of it this way: there are groups of threads (the `grid` on the host), and those groups are numbered by `blockIdx`; each group has `blockDim` threads (`tBlock` on the host), and each thread within its group is numbered by `threadIdx`.
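The description above can be made concrete with a minimal CUDA Fortran sketch of the saxpy kernel (`y = a*x + y`) plus a host-side driver that launches it. The kernel signature and the names `grid` and `tBlock` come from the text; the module name `saxpyMod`, the driver `saxpy_on_device` and the block size of 256 are hypothetical choices for illustration. Because CUDA Fortran indices start at 1, `blockIdx%x - 1` counts the full blocks that precede the current one.

```fortran
module saxpyMod
  use cudafor
  implicit none
contains
  ! The kernel: runs on the device, launched from the host
  attributes(global) subroutine saxpy(x, y, a)
    real :: x(:), y(:)
    real, value :: a
    integer :: i, n
    n = size(x)
    ! Global 1-based index: full blocks before this one, plus position in block
    i = blockDim%x * (blockIdx%x - 1) + threadIdx%x
    ! The last block may be only partly used, so guard the array bounds
    if (i <= n) y(i) = y(i) + a * x(i)
  end subroutine saxpy

  ! Hypothetical host-side driver: computes y = a*x + y on the GPU
  subroutine saxpy_on_device(n, x, y, a)
    integer, intent(in) :: n
    real, intent(in) :: x(n)
    real, intent(inout) :: y(n)
    real, intent(in) :: a
    real, device, allocatable :: x_d(:), y_d(:)
    type(dim3) :: grid, tBlock

    allocate(x_d(n), y_d(n))
    x_d = x            ! host -> device copy via the overloaded assignment
    y_d = y

    ! 1D launch: only the x components are non-trivial
    tBlock = dim3(256, 1, 1)
    grid = dim3((n + tBlock%x - 1) / tBlock%x, 1, 1)   ! round up to whole blocks
    call saxpy<<<grid, tBlock>>>(x_d, y_d, a)

    y = y_d            ! device -> host copy; waits for the kernel to finish
    deallocate(x_d, y_d)
  end subroutine saxpy_on_device
end module saxpyMod
```

A program that sets `x = 1.0` and `y = 2.0` and then calls `saxpy_on_device(n, x, y, 2.0)` should find every `y(i)` equal to 4.0. Saved in a file with the `.cuf` suffix, the code can be compiled with nvfortran.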

The kernel, i.e. a function that runs on the device, is marked with `attributes`, which describes the scope of the routine; `global` means the routine is visible from the host.

Dim3 grid cuda install

Install the appropriate Nvidia drivers for your system. Earlier, the CUDA Fortran compiler was developed by PGI; from 2020 the PGI compiler tools were replaced by the Nvidia HPC SDK. The installation path is usually /opt/nvidia/hpc_sdk/Linux_x86_64/*/compilers/bin; add it to your PATH. You may have to restart your system before using the compilers. You can use the compilers nvc, nvc++ and nvfortran to compile C, C++ and Fortran respectively. Compile CUDA Fortran with nvfortran and just run the executable.

Dim3 grid cuda code

Flow of Program: The main code execution starts on the CPU, aka the host. Separate memory is allocated on the host and on the device to hold the data for their respective computations. When needed, the data is copied from the host to the device and back. The host can launch a group of kernels on the device. When the kernels are launched, the host does not wait for their execution to finish and can proceed with its own flow. The memory copy between the host and the device can be synchronous or asynchronous; usually it is done synchronously. The assignment operator (`=`) in CUDA Fortran is overloaded with a synchronous memory copy, i.e. the copy operation waits for the kernels to finish their execution.

Blocks and grids can be 1D, 2D or 3D, and the program has to be written so that it handles multidimensional blocks and grids.
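For a multidimensional launch, both `dim3` values get more than one non-trivial component. The following is a minimal sketch, assuming a kernel that adds a scalar to every element of an `m` x `n` matrix; the names `add2dMod`, `addScalar2D` and `add_scalar_on_device`, and the 16 x 16 block shape, are hypothetical choices for illustration. The `x` dimension of the launch maps to rows and the `y` dimension to columns.

```fortran
module add2dMod
  use cudafor
  implicit none
contains
  ! Hypothetical 2D kernel: A(i, j) = A(i, j) + s for every element
  attributes(global) subroutine addScalar2D(A, m, n, s)
    integer, value :: m, n          ! declared first: used in A's bounds
    real :: A(m, n)
    real, value :: s
    integer :: i, j
    i = (blockIdx%x - 1) * blockDim%x + threadIdx%x   ! row index
    j = (blockIdx%y - 1) * blockDim%y + threadIdx%y   ! column index
    ! The grid is rounded up in both dimensions, so guard both bounds
    if (i <= m .and. j <= n) A(i, j) = A(i, j) + s
  end subroutine addScalar2D

  ! Hypothetical host-side driver for the 2D kernel
  subroutine add_scalar_on_device(m, n, A, s)
    integer, intent(in) :: m, n
    real, intent(inout) :: A(m, n)
    real, intent(in) :: s
    real, device, allocatable :: A_d(:, :)
    type(dim3) :: grid, tBlock

    allocate(A_d(m, n))
    A_d = A                         ! host -> device copy

    ! 2D launch: 16 x 16 = 256 threads per block
    tBlock = dim3(16, 16, 1)
    grid = dim3((m + tBlock%x - 1) / tBlock%x, &
                (n + tBlock%y - 1) / tBlock%y, 1)
    call addScalar2D<<<grid, tBlock>>>(A_d, m, n, s)

    A = A_d                         ! device -> host copy, waits for the kernel
    deallocate(A_d)
  end subroutine add_scalar_on_device
end module add2dMod
```

Mapping `threadIdx%x` to the first (row) index matches Fortran's column-major layout, so threads with consecutive `threadIdx%x` touch consecutive memory locations. For a 1000 x 500 matrix this launch uses 63 x 32 blocks, and the `if` guard skips the threads that fall outside the matrix.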
